Materials, software and processes I can, can't, and can sort of use - Updated

Now that I am coming to the end of my FMP, I have tried out lots of new things, become familiar with those I had only just tried, and become more aware of what I have yet to learn. Here is an updated list of my skills, with a key to indicate what has changed since starting my project.

Key

New entries that didn't appear on the list before

Things I could do that I am now more confident with

Things that have moved from the 'tried' group to the 'can do' group

Things that have moved from the 'can't do' group to the 'tried' group

Things that have moved from the 'can't do' group to the 'can do' group

Things I can do/use:

- Draw

- Paint

- Microsoft Paint

- Final Cut Pro

- iMovie

- Make Flip-books

- Hand-drawn animation on acetate

- Photoshop

- Premiere Pro

- Photography

- Flash Animation

- Make Zoetropes

- Lighting

- Creating soundscapes

- Stop-motion wireframe animation

- Developing black and white analogue photographs

- Cinematography

- Using sound effects

- Scratch film/animation

- Making a camera obscura

- Mindmapping

- Moodboards

- Using questions to find answers

- Making circuits

- Arduino programming

- Prototyping

- Using sensors and smart materials

- 3D Animation

- Distorting images using grids

- Illustrator

- Unity

- Blender

- 3D Modelling (digital)

- Creating Textures

- Orthographic Drawing and blueprinting

- Using virtual cameras and lights in a scene

- Creating distortion and working in stereoscopic view

- App building

- Adobe Bridge

- Creating virtual realities

Things I have tried but am not yet confident with:

- Making models

- Time Lapse

- After Effects

- Making 3D zoetropes

- Creating characters

- Writing narrative

- Creating different effects and shapes when developing photographs

- Storyboarding

- Creating comic strips/still animation

- Making a pinhole camera

- Making cameras/lenses

- HTML coding

- C# coding

- JavaScript coding

- Working with electronics

- Step outlines

- Parallax effect 2.5D animation

Things I know that I don't know how to do/use:

- Colour Casting

- Foley

- Games design

- Augmented reality

- The internet of things

- Interactive environments

- Set design

- Prop making

- Costume design

- Flexible 3D modelling

- Cell animation

- Claymation

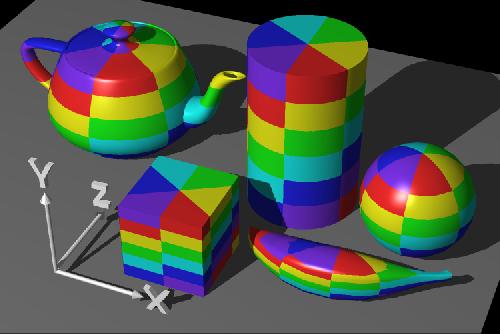

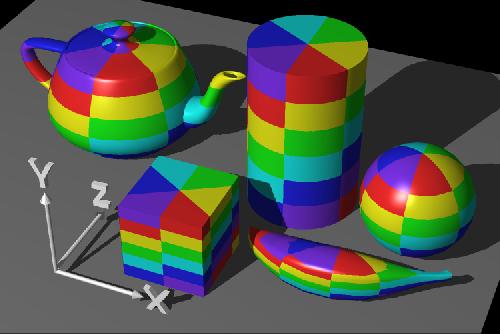

Here I have set out the pros and cons of texturing in each programme so that I can quickly look at the list and evaluate the best software and method for each situation. From this, I have concluded that for detailed, multi-faced objects I should UV map my textures in Blender before importing them into Unity, to keep that level of quality and detail. However, for very simple objects where I only need to texture a single face, such as a ground plane, texturing in Unity is faster and easier and does the job just as well.

Unity

Pros

- Simple click and drag

- No platform crossing

- No need to prepare object for texturing

- No different methods for colours, images etc.

- Can cycle through different textures

- Different rendering options

- Can use video files as textures

- Tiling capabilities

Cons

- No positioning ability

- Cannot use multiple textures on single child object

- Cannot re-size textures

- Cannot use animated gifs

Blender

Pros

- Fully positionable

- UV mapping capabilities

- Tiling capabilities

- Different shaders, renderers and baking options

- Any number of textures can be used on a single object

- Paintable

- Resizable

- Rotatable

- Multiple methods

- Can use videos as textures

Cons

- Platform crossing

- Extensive method

- Can't use Smart UV Project on curve objects (they must be converted to meshes first)

- Most objects require much preparation for texturing

- Different methods for each object/texture type and combination

- Can't use animated gifs as textures

For some elements of the assets I am modelling, such as the crowns of the clocks or the centre where the two hands meet, I have decided to use a basic sphere mesh as my starting point. However, Blender threw me off slightly when I discovered there are two different types of basic sphere mesh, so I did some research to find out which type would be best for my purposes. The two types are UV spheres and icospheres, and the fundamental difference between them is how they are constructed.

UV spheres use rings and segments, similar to the lines of latitude and longitude on the Earth. Near the poles (the top and bottom along the Z-axis) the vertical segments converge. They are best used where you need a very smooth, symmetrical surface (the more subdivisions, the smoother). This type of sphere is useful for projecting terrain onto planets and for complex modelling, as it is easily subdivided even after being created, and it maps readily onto Mercator projections. However, UV spheres do relatively poorly when realistic topology is your key goal, and because of their symmetry and their use of quads they are not the best choice for organic, natural shapes.

The icosphere takes a different approach. It is a polyhedron made of triangular faces, starting from an icosahedron (hence the name) whose faces are then subdivided more finely. All of its faces are roughly the same size, which can be useful for certain types of UV mapping with non-organic textures: for example, an icosahedral die, or billiard balls, where stretching must be minimised near the point where the number is printed onto the ball. Icospheres are best used for things such as geodesic domes, planets with realistic terrain, and rough surfaces such as golf balls. If symmetry is important to you, however, stick with UV spheres, since icospheres do not have the same ring-by-ring symmetry.

I would like my spherical elements to appear very smooth with as few subdivisions as possible, because I need to keep the file size down for rendering on a phone screen, so I have opted to work with UV spheres. The texture mapping on these parts will not be complicated, so I do not need identical faces, and I am trying to use quadrilateral rather than triangular faces wherever possible to keep my shapes organic.
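To put rough numbers on the file-size trade-off, the short Python sketch below counts the vertices and faces of each sphere type from how it is constructed: a UV sphere with a given number of segments and rings, and an icosahedron whose triangles have each been split into four, n times. The formulas follow from the geometry described above. The 32-segment, 16-ring example is, I believe, Blender's default UV sphere; how n maps onto Blender's own "Subdivisions" field is not assumed here.

```python
def uv_sphere_counts(segments: int, rings: int) -> dict:
    """Vertex/face counts for a UV sphere with `segments` meridians and `rings` bands."""
    # Each of the (rings - 1) interior latitude lines has one vertex per segment,
    # plus one vertex at each pole.
    vertices = segments * (rings - 1) + 2
    # The two polar bands are fans of triangles; every other band is a ring of quads.
    triangles = 2 * segments
    quads = segments * (rings - 2)
    return {"vertices": vertices, "faces": triangles + quads,
            "triangles": triangles, "quads": quads}


def icosphere_counts(n: int) -> dict:
    """Counts for an icosahedron whose triangles have been split into four, n times."""
    faces = 20 * 4 ** n          # every split turns one triangle into four
    vertices = 10 * 4 ** n + 2   # n = 0 gives the plain icosahedron's 12 vertices
    return {"vertices": vertices, "faces": faces, "triangles": faces, "quads": 0}


for name, c in [("UV sphere 32x16", uv_sphere_counts(32, 16)),
                ("UV sphere 16x8", uv_sphere_counts(16, 8)),
                ("Icosphere n=1", icosphere_counts(1)),
                ("Icosphere n=2", icosphere_counts(2))]:
    print(f"{name}: {c['vertices']} verts, {c['faces']} faces "
          f"({c['quads']} quads, {c['triangles']} tris)")
```

Even a fairly coarse UV sphere keeps most of its faces as quads, with triangles only in the two polar fans, which fits the preference for quadrilateral faces above.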

As Blender is a specialised 3D modelling programme, it has many different tools you can use to create the shapes you want. It builds 3D objects out of vertices, edges and faces; modelling in this way is called 'polygonal' modelling. A vertex is a single 0D point in three-dimensional space, an edge is a 1D line connecting two vertices, and a face is a 2D shape bounded by three or more vertices: together these make up a 3D shape. Basic modelling involves creating and moving vertices to form the desired edges, which in turn form the desired faces, which make up the final 3D shape. However, there are tools to make this process easier, faster, and more precise and accurate.
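The vertex/edge/face idea can be shown with plain Python data, no Blender needed. The sketch below uses a hypothetical unit cube, not one of my assets: vertices are coordinates, faces are loops of vertex indices, and edges are derived from the faces. For a closed shape without holes, Euler's formula V - E + F = 2 should hold, which makes a quick sanity check on the counts.

```python
# A unit cube as plain vertex/edge/face data (polygonal modelling in miniature).
vertices = [
    (0, 0, 0), (1, 0, 0), (1, 1, 0), (0, 1, 0),   # bottom four corners
    (0, 0, 1), (1, 0, 1), (1, 1, 1), (0, 1, 1),   # top four corners
]

# Each face lists vertex indices in order around its edge (all quads here).
faces = [
    (0, 1, 2, 3), (4, 5, 6, 7),   # bottom, top
    (0, 1, 5, 4), (1, 2, 6, 5),   # four sides
    (2, 3, 7, 6), (3, 0, 4, 7),
]


def edges_from_faces(faces):
    """Collect each undirected edge once from the face loops."""
    edges = set()
    for face in faces:
        for i, a in enumerate(face):
            b = face[(i + 1) % len(face)]   # wrap round to close the loop
            edges.add((min(a, b), max(a, b)))
    return edges


edges = edges_from_faces(faces)
V, E, F = len(vertices), len(edges), len(faces)
print(V, E, F, V - E + F)  # 8 12 6 2
```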

Here is a list of the most basic modelling tools available in Blender:

- Positioning

- Scaling

- Rotating

- Cutting out

- Extruding

- Creating and deleting Vertices, Edges, and Faces

- Subdividing

- Filling

- Mirroring

Different models obviously require different modelling techniques, and some of the more complex models I am creating need a combination of them. I will use most of the techniques above, and more, at one time or another, but here are the main techniques I am using for my specific models.

Curve Modelling

Curve modelling has a very different starting point from most other techniques because, as the name suggests, it starts with a curve or curves. I have been using this technique for a wide range of objects, such as all of my 'flat' assets, most of the buildings and skyscrapers, and even the clock hands in my final piece. You start by adding a Bezier curve to the scene; for these purposes, I keep the curve in two dimensions rather than three. By converting the handles to vector handles (which become free handles once they are moved), you can control the length and position of the bends in the curve to outline any shape, even one with sharp corners. Once the shape has been drawn out, apart from the last connecting piece, you join the first and last points to close the shape. After this, you switch into object mode and change the curve's shape setting from 3D to 2D, which fills it in. Once you have a filled-in shape, use the extrude tool to make a prism of the desired thickness. Other tools can then be applied, and before the shape can be textured it must be converted into a mesh object.
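The handle behaviour above can be seen in a small Python sketch that evaluates one cubic Bezier segment from its two control points and two handles. It is a general Bezier illustration, not Blender's code; as I understand it, Blender's vector handles point towards the neighbouring control point, and when both handles lie on the straight line between the points the segment is straight, which is what makes sharp corners possible. The point coordinates are hypothetical.

```python
def bezier_point(p0, h0, h1, p1, t):
    """Point at parameter t (0..1) on a cubic Bezier segment.

    p0 and p1 are the control points, h0 is p0's outgoing handle and
    h1 is p1's incoming handle; all are (x, y) tuples.
    """
    u = 1 - t
    # Bernstein weights for the four points.
    w = (u ** 3, 3 * u * u * t, 3 * u * t * t, t ** 3)
    pts = (p0, h0, h1, p1)
    return tuple(sum(wi * p[k] for wi, p in zip(w, pts)) for k in range(2))


def sample_segment(p0, h0, h1, p1, steps=4):
    """Evenly spaced samples along the segment, including both ends."""
    return [bezier_point(p0, h0, h1, p1, i / steps) for i in range(steps + 1)]


# Handles lying on the line between the points: a straight edge.
straight = sample_segment((0, 0), (1, 0), (2, 0), (3, 0))
# Handles pulled off that line: the segment bends.
bent = sample_segment((0, 0), (0, 2), (3, 2), (3, 0))
print(straight)
print(bent)
```

Every sample on `straight` has a y-value of 0, while `bent` rises to (1.5, 1.5) at its midpoint before returning to (3, 0).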

Mesh modelling

Mesh modelling can be considered the 'norm' of 3D modelling. It begins with a 3D shape right from the outset, chosen according to the desired outcome: for example, a torus (for the rim of the 'dripping clocks'), a sphere (for the dials), or a cube (for most other 3D shapes). Once your basic shape is in place, switch to edit mode and position the vertices in three dimensions to make up the desired shape, subdividing any surfaces that need more vertices for greater accuracy.

Creating Faces

I used this technique when creating 2D elements (planes) on my 3D shapes, for example, the faces of the 'dripping clocks'. Faces can have any number of points, but this affects both the look and the behaviour of the shape. When there are too many triangular faces, an object tends to look too 'angular': more geometric and less realistic. On the other hand, faces with too many vertices reduce how much a shape 'bends' and leave it looking too 'cubic'. Quadrilaterals are generally a good choice, as they are easy to work with and tend to look better both unmodified and after a subsurf modifier. However, by default Blender creates triangular faces when converting a curve object to a mesh object, which causes some problems.
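To see why filling and converting a curve produces so many triangles, here is a minimal Python sketch of fan triangulation, one simple way to split a convex polygon into triangles. It is only an illustration: Blender's own triangulation is not necessarily a fan, but any triangulation of an n-sided face without extra interior points gives n - 2 triangles.

```python
def fan_triangulate(face):
    """Split a convex polygon (tuple of vertex indices) into triangles sharing its first vertex."""
    first = face[0]
    return [(first, face[i], face[i + 1]) for i in range(1, len(face) - 1)]


quad = (0, 1, 2, 3)
hexagon = (0, 1, 2, 3, 4, 5)
print(fan_triangulate(quad))          # [(0, 1, 2), (0, 2, 3)]
print(len(fan_triangulate(hexagon)))  # 4
```

A flat outline with dozens of points therefore turns into dozens of long, thin triangles, which is one reason such caps are awkward to smooth.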

Modifiers

Modifiers aren't strictly modelling tools, but changes you can make to an object after modelling it. There are many different modifiers available, but the three I have found most useful are the subsurf modifier (on all of my smooth-looking shapes), the curve modifier (the bodies and hands of the 'dripping clocks') and the boolean modifier (the cut-out windows on some of the buildings). The subsurf, or subdivision surface, modifier does what it says on the tin: it subdivides the surfaces of an object. In layman's terms, it splits each face into many smaller faces and angles them so that, with enough subdivision, the object appears to have no flat faces at all, giving a completely smooth appearance.

Curve modifiers are also named very explicitly: they curve your object. To use one, place a curve in the scene and use its handles to bend it as desired, then add a curve modifier to your object and select the curve as the modifying object. Your object will bend to match the shape of the curve, and you can reposition the object so that it curves in the right places. This works with both 2D and 3D curves.

The boolean modifier has multiple uses, but in my case I used it to 'cut' sections out of mesh shapes to form doors and windows. Place mesh objects of the chosen shape in the scene and position them 'inside' your model, then add a boolean modifier to your model and select the objects you placed inside it. The parts of the mesh that overlap those objects are removed, so once you apply the modifier and move or delete the objects, 'holes' are left in your mesh in their shape.
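Blender's subsurf modifier works on whole meshes (using Catmull-Clark subdivision by default), which is too much for a short example, so the Python sketch below swaps in Chaikin's corner-cutting algorithm, a simpler 2D analogue that works on an outline. Each pass replaces every edge with two points a quarter and three quarters of the way along it, so the corners are gradually cut away and the outline looks smoother with each pass, in the same spirit as raising the subsurf level. The square is a hypothetical input.

```python
def chaikin(points, iterations=1, closed=True):
    """Chaikin corner cutting: replace each edge with points at 1/4 and 3/4 along it."""
    for _ in range(iterations):
        new_points = []
        n = len(points)
        edge_count = n if closed else n - 1
        for i in range(edge_count):
            (x0, y0), (x1, y1) = points[i], points[(i + 1) % n]
            new_points.append((0.75 * x0 + 0.25 * x1, 0.75 * y0 + 0.25 * y1))
            new_points.append((0.25 * x0 + 0.75 * x1, 0.25 * y0 + 0.75 * y1))
        points = new_points
    return points


square = [(0, 0), (4, 0), (4, 4), (0, 4)]
once = chaikin(square, 1)
print(len(once), once[:2])       # 8 [(1.0, 0.0), (3.0, 0.0)]
print(len(chaikin(square, 3)))   # 32
```

Each pass doubles the number of points on a closed outline, much as each subsurf level multiplies the number of faces, which is why high levels quickly become expensive on a phone.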

In order to create my virtual world, I need to 3D model my assets: in this case, objects and scenery. The programme I use to do this largely depends on what I am modelling, and what I need it to do.

Unity

Firstly, Unity is not made for modelling. It is a game engine, but that doesn't mean it can't be used to make some of the physical components of my scene. 3D modelling in Unity essentially consists of using the menu to create a 'GameObject': GameObject > 3D Object > (choose from list). There are 6 simple physical mesh objects and 5 others to choose from. In terms of tools, you can only move, scale and rotate objects along 3 axes, so the extent of possible modelling is putting together pre-made mesh objects and scaling them to look as close to the desired shape as possible. However, it does have its uses. As Unity mesh objects automatically have colliders, it can be used to create the bare bones of a scene, such as the ground and the walls of buildings. Some of the preset objects are also incredibly useful, such as trees, which are fully customisable and can be used with wind zones to create realistic leaf-shake, and interactive cloth objects, which are fully flexible and react naturally to physics.

Pros:

- No platform crossing

- Easy to use

- Automatic colliders, mesh renderers, and mesh filters

- Easy to use physics and wind zones

- Perspective/Isometric views

- Simple lights and cameras

- Multiple screens

- Global and local axes

Cons:

- Very limited shape customisation

- Very limited texturisation

- Few tools

- No built in export

- Single view mode

- Single mode (whilst creating)

Blender

Blender is purpose-built 3D modelling software, so by nature it has many more modelling functions than Unity. There are many techniques for creating your shapes, but most start either with a 2D outline drawn with curves, which you then extrude or adapt into a 3D shape, or with a cube or other basic mesh object, which you subdivide and whose points you edit to create the shape. You can use additive (like clay) or subtractive (like carving) methods, and there are many tools to make the job faster and easier. With this great functionality, however, comes great complexity. There is essentially a different method for each type of object you'd like to create, and, like most arts, it would take a lifetime to master. There are also a few other issues, such as needing to add colliders manually to each object after importing it into Unity so that it acts solid, and any movement needs to be keyframe animated and driven by an animation controller, so it doesn't look quite as natural as physics-based movement. Modelling in Blender is best saved for complicated shapes and any objects that I would like to animate in their own right.

Pros:

- Can model any shape

- Many modelling tools for multiple techniques

- Customisable views

- Perspective/orthographic views

- UV texturising options

- Render and bake ready

- Customisable lights and cameras

- Wireframe, solid, texture etc. views

- Global, local and more axes

- Many export options

- Multiple modes

- Multiple screens

Cons:

- Platform crossing

- Complicated

- Have to change settings upon import

- Must add colliders, rigidbody, mesh renderers and filters

- Aren't compatible with physics and wind zones

- Require controllers

Unity is software used to create many modern games. It can be used to add an interactive component to a 2D or 3D animation, or to create things from scratch. I would like to use it to add the interactivity to my final piece: namely head tracking, movement and 3D imaging, and possibly more once I develop my ideas. I created a very simple environment in Unity to work out how to add these interactions.

Firstly, to enable head tracking, I had to download an SDK (software development kit) for creating smartphone-based virtual reality apps. The SDK allows the environment to respond to readings from the smartphone's accelerometer along 3 axes, so that it can tell exactly where a person is facing and smoothly change the image on the screen accordingly. While you work on a computer to create the environment, Unity uses the mouse to control the head tracking; once I had downloaded the SDK, I could program the game to use the readings from the accelerometer as if they were the computer mouse.

I then worked on creating the movement. In Unity, you can select a pre-made first-person character. This character is automatically controlled by the arrow keys and space bar, and comes with a camera in its 'face' so that the game is viewed from its point of view. Once I had selected this character, I was able to manipulate it using the same SDK to create the 3D imaging. By moving the pre-set camera 0.5 units to the left, and creating an identical camera 0.5 units to the right (making them around eye-width apart), I was able to mimic depth perception by placing the views from these cameras side by side, making the image appear 3D. I then distorted the images to cancel out the distortion created by looking at them through the lenses of Google Cardboard.
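The two-camera setup can be sketched as a small Python function that places the left and right eye positions either side of the head, perpendicular to the direction the head is facing. It assumes Unity-style axes (y up, z forward at zero yaw) and only handles turning left and right; the 1-unit default separation mirrors the 0.5-unit offsets above. If one Unity unit is treated as a metre, real eyes are only about 6 cm apart, so the separation is left as a parameter.

```python
import math


def eye_positions(head, yaw_degrees, separation=1.0):
    """Left/right camera positions either side of a head position.

    head: (x, y, z) with y up. yaw_degrees: rotation about the vertical axis,
    where 0 means the head faces +z. Each eye sits separation/2 along the
    head's right-hand direction.
    """
    yaw = math.radians(yaw_degrees)
    # Right-hand direction for a head facing (sin(yaw), 0, cos(yaw)).
    right = (math.cos(yaw), 0.0, -math.sin(yaw))
    half = separation / 2
    x, y, z = head
    left_eye = (x - right[0] * half, y, z - right[2] * half)
    right_eye = (x + right[0] * half, y, z + right[2] * half)
    return left_eye, right_eye


print(eye_positions((0.0, 1.6, 0.0), 0))  # ((-0.5, 1.6, 0.0), (0.5, 1.6, 0.0))
```

Because both eyes are recomputed from the head's yaw, turning the head keeps the pair level and the correct distance apart, which is what keeps the stereo effect working as the user looks around.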

Sound

Sound makes a huge difference to immersive pieces, as it engages an extra sense. When an audience member is cut off from the real world, unable to see or hear it, they are much more in the moment and the virtual reality feels a lot more real. For the sound in my piece, I created a non-linear looping soundscape: each individual clip loops, but because each clip is a different length, the combined sound is continuously changing. I did this using Unity's 3D sound capability. I assigned the correct sound to each of the objects that would make it, then edited the settings for each to make the sound as realistic as possible. The individual objects each have a pan level of 1 and a spread of 0. This means panning is at maximum, so the sound changes noticeably as you turn towards and away from the object producing it. As I will be playing it through surround sound headphones, if the object making the sound is directly to your left you will only hear it in your left ear, and as you turn towards it the sound will spread out. The sea has four audio sources, each with a pan level of 1 and a spread of 180, which spreads each source's sound across a wide arc rather than pinning it to a single point. As I have four, one on each edge of the square base where it meets the water, the sea sounds as if it is coming from all directions but is fairly distant. I also used two separate sea sounds so that it subtly changes as you turn on the spot. Finally, I left each source with a slight Doppler effect, meaning that the sound warps realistically as you move away from it. This won't be noticeable to the average human ear, but it heightens the realism and therefore the immersion.
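Two parts of the reasoning above can be checked with a little arithmetic, sketched here in Python. First, loops of different lengths only line up again after the lowest common multiple of their lengths; the clip lengths below are hypothetical whole-second values, not my actual clips. Second, the textbook Doppler formula shows how small the pitch change is at walking speed, assuming sound travels at about 343 m/s. Unity scales its own Doppler effect by a Doppler level setting, so this is the physical effect, not Unity's exact calculation.

```python
import math


def combined_repeat(lengths_s):
    """Seconds before several looping clips (whole-second lengths) all restart together."""
    return math.lcm(*lengths_s)


def doppler(freq_hz, listener_speed_towards=0.0, source_speed_towards=0.0, c=343.0):
    """Heard frequency; speeds in m/s are positive when moving towards the other party."""
    return freq_hz * (c + listener_speed_towards) / (c - source_speed_towards)


clips = [7, 11, 13]             # hypothetical clip lengths in seconds
print(combined_repeat(clips))   # 1001
# Walking away (about 1.4 m/s) from a stationary 440 Hz source:
print(round(doppler(440.0, listener_speed_towards=-1.4), 1))  # 438.2
```

Three short loops give a combined pattern that takes over sixteen minutes to repeat, and the walking-speed pitch change is well under one percent, which fits the claim that it is barely noticeable.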

Computer Animation

I have been using animation as the basis of my whole project, from creating test pieces to using it as a texture and making animated elements for my final piece. Earlier I looked at stop motion animation, and some of this is fairly similar in theory but very different in technique. Firstly, I used Flash animation to create an animation plan for my final piece. This is a type of 2D animation that is entirely time-based and uses a vector drawing tool, so I could transfer the skills I picked up doing my orthographic drawings to drawing in Flash. For this piece I drew out the key frames, then went back and forth doing the in-betweens until it was satisfyingly smooth. This is not possible in Blender, as it automatically tweens between your keyframes for you, so if an object follows the wrong path it is very hard to go back and forth fixing it without causing the object to 'jump'. On the other hand, in Blender you can have different time sequences for different components in the same animation. For example, I only did 44 frames of animation for the minute hand of the clock and then used a Cycles F-curve modifier to continue it, making it loop, whereas I did 516 frames of animation for the hour hand. In Flash you would have to copy and paste the frames you wanted repeated, and then hope they ended at exactly the same time. The animated texture I used for the water was a different story altogether. That texture is just a single image, or frame, which is tiled to repeat itself many times across the plane. It is then controlled by a script that lets me set the speed and direction of its movement, creating the illusion of waves.
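The three ideas in this paragraph (automatic tweening, cycling a short animation, and scrolling a tiled texture) can be sketched in a few lines of Python. This is an illustration under simplifying assumptions, not Blender's or Unity's code: tweening here is linear, whereas Blender's default keyframe interpolation is Bezier, which eases in and out, and the one-turn-per-44-frames minute hand is a hypothetical keyframe set.

```python
def tween(keyframes, frame):
    """Linearly interpolate between (frame, value) keyframes sorted by frame."""
    if frame <= keyframes[0][0]:
        return keyframes[0][1]
    for (f0, v0), (f1, v1) in zip(keyframes, keyframes[1:]):
        if f0 <= frame <= f1:
            t = (frame - f0) / (f1 - f0)
            return v0 + t * (v1 - v0)
    return keyframes[-1][1]


def cycled(keyframes, frame):
    """Repeat the keyframed span indefinitely, like a cycling F-curve modifier."""
    start, end = keyframes[0][0], keyframes[-1][0]
    return tween(keyframes, start + (frame - start) % (end - start))


def uv_offset(speed, direction, seconds):
    """Offset for a scrolling tiled texture, wrapped into the 0..1 range."""
    dx, dy = direction
    return ((speed * dx * seconds) % 1.0, (speed * dy * seconds) % 1.0)


# Hypothetical minute hand: one full turn (360 degrees) every 44 frames.
minute_hand = [(0, 0.0), (44, 360.0)]
print(cycled(minute_hand, 22))  # 180.0
print(cycled(minute_hand, 66))  # 180.0 (second time round)
print(uv_offset(0.1, (1, 0), 12.5))
```

Wrapping the texture offset into 0..1 works because the image is tiled: shifting it by a whole tile looks identical, so the water can scroll forever without the numbers growing.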

Coding

Coding is the general term for writing instructions, in a programming language, for a computer to carry out. Unity uses a mixture of C#, UnityScript (a JavaScript-like language) and Boo scripts to control its assets. For the code controlling my piece, I have mostly been using standard Unity scripts or scripts I have found on the internet, tweaking them slightly to fit my needs. I have been using MonoDevelop, the code editor that comes with Unity, to edit my code. The only type of coding I had tried before this project was Arduino coding, for the creation of 'Eshu' in the Inorganisms project, and I transferred my knowledge of that language to help me understand these new ones.

Orthographic Drawing

Orthographic drawing is where you draw an object with no perspective from at least 3 different angles (top, front and side), so that you can recreate its shape. I did my orthographic drawings using a graphics tablet and Adobe Illustrator, so as to draw in vector form. This means the lines I create are saved as lines rather than coloured-in pixels, creating a smoother overall shape. I started by tracing over the original painting in a new layer, then drew coloured dotted lines out from key points on the object to get a sense of proportion. I then visualised what the shape would look like from a certain angle and drew it from that angle. Once I had one side, I could draw more lines and repeat, eventually ending up with 3 views of an object that fit together perfectly. Another thing about having no perspective is that if you draw two opposite sides, e.g. the front and back, the images will have exactly the same silhouette, but mirrored. Once I had all my orthographic drawings, I could set them as background images for the respective views in Blender and set the view to orthographic, so that after modelling along the lines and switching back to perspective, the model matches the shape in the painting when viewed from the correct angle.
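Orthographic projection is simple enough to write down directly: each view just drops the coordinate along its viewing direction, so distance from the viewer has no effect on size. The Python sketch below assumes z is up (as in Blender) and uses a hypothetical L-shaped outline to check the point about front and back views being mirror images.

```python
def orthographic(points, view):
    """Project 3D points (x, y, z), z up, by dropping the axis along the view direction."""
    if view == "front":   # looking along +y: x runs across, z runs up
        return [(x, z) for x, y, z in points]
    if view == "back":    # looking along -y: the across-axis is flipped
        return [(-x, z) for x, y, z in points]
    if view == "side":    # looking along -x from the +x side: y across, z up
        return [(y, z) for x, y, z in points]
    if view == "top":     # looking down -z: x across, y up the page
        return [(x, y) for x, y, z in points]
    raise ValueError(f"unknown view: {view}")


# A hypothetical L-shaped outline lying in the xz-plane.
points = [(0, 0, 0), (2, 0, 0), (2, 0, 1), (1, 0, 1), (1, 0, 3), (0, 0, 3)]
front = orthographic(points, "front")
back = orthographic(points, "back")
# Flipping the back view left-to-right gives the front view again.
print([(-u, v) for u, v in back] == front)  # True
```

Moving a point further away along the viewing axis leaves its projected position unchanged, which is exactly the "no perspective" property that lets the drawings line up with the model in Blender's orthographic view.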

User Input

User input is vital to interactive work, as it is what makes the work interactive. The main user input I used throughout my project was head tracking: the screen changes to show the landscape moving as if you were moving your head inside the environment. This was coded within Unity using the Dive SDK's OpenDive sensor script. I also tried out three further types of user input to try to increase the interactive nature of my piece. In Unity, all interactions are controlled by code, and each type of user input has to be coded for differently. I learnt to control a simple scene in Unity via the Bluetooth connection of a Wii Remote and a simple script. This was easy enough, as the script was already available in almost exactly the form I needed; however, getting it to do anything else would require a lot more knowledge and a lot more time. If I want to create more effective user input, I will need to vastly improve my coding skills. The other user inputs I tried were the Leap Motion and internal UI using an autowalk script. The Leap Motion fell at the first hurdle, as I couldn't find a way to connect it to the Google Cardboard because of hardware incompatibility, which left the autowalk script. I managed to get it working in a simple scene but, as with the Wii Remote, I would have to improve my coding knowledge a lot before I could put it to good use.

Step Outlines and Concept Art

At the start of my project, I documented my concept ideas through step outlines and hand drawing. Hand drawing, though one of the simplest media, is one of the most effective: it is very efficient and excellent for getting ideas on paper. By using the classic hand-drawing technique of cross perspective, I started thinking about dimensions and immersion in a very simple drawing. The skills are also easily transferable to other elements of my project, such as vector drawing for creating my modelling guides and drawing the graphics for my instruction sheet.

The step outline was also very helpful for getting ideas down and, again, was an easy way to start thinking about immersion. By thinking about two different senses at once, I was able to better visualise what sort of piece my ideas could become, which led into my more solid ideas of creating virtual realities.

Stop Motion Animation

Stop motion animation both informed my piece before I started working on it and served as a medium for consolidating ideas at the beginning of the project. It informed my piece because it was my first experience of any sort of 3D animation. Though obviously very different from CGI 3D modelling, it helped me think about how objects move in a 3D space and how that can be shown on a 2D screen. This later helped me get my head around how to make objects move in a 3D space and show them on two 2D screens in order to create a 3D image again. Stop motion animation also helped me realise which angles are best for showing off 3D movement, and therefore at what angles I should place the objects in my scene to create the best viewing experience. Both the films here are stop motion animations that use 2D elements to create 3D ones, through standing paper and origami respectively. This informed my piece as an early exploration of my theme of the relationship between 2D and 3D.

Tarot Card Film from Luisa Charles on Vimeo.

Working with Photography

I started working with photography as a source of inspiration, collecting images of interesting and unfamiliar environments, such as those in other countries or the countryside. This has helped me greatly in a number of ways on the journey to my final outcome. For example, looking at the world through a camera lens has made me realise things about framing, perspective and parallax that I never noticed before. Both parallax and perspective are key to making things look 3D, and they become all the more apparent when looking through the lens of a camera, as it flattens the image and takes away optical illusions. This will benefit me when trying to create a piece that bridges the gap between 2D and 3D. Framing is also very important, as my entire piece is to be viewed through a lens rather than on a screen or in real life, so the frame is essentially the only thing controlling what the environment looks like. I need to create a visually interesting first frame (the default camera position before the user turns their head) so that viewers are compelled to keep looking.

I have also learnt more and more about the relationship between depth of field and aperture, and between aperture, shutter speed and ISO when it comes to lighting. This is very important because in 3D animation the virtual cameras you use work in much the same way as DSLRs, so learning how to use a real camera will greatly benefit me when it comes to using a virtual camera within my virtual reality.
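The aperture/shutter/ISO relationship can be made concrete with the standard exposure value formula, EV = log2(N^2 / t), adjusted here to an ISO 100 reference. The Python sketch below is a worked example with nominal full-stop settings, not a description of any particular camera; virtual cameras in Blender or Unity expose some of these settings, not necessarily all of them.

```python
import math


def exposure_value(f_number, shutter_s, iso=100):
    """Exposure value referenced to ISO 100: EV = log2(N^2 / t) - log2(ISO / 100)."""
    return math.log2(f_number ** 2 / shutter_s) - math.log2(iso / 100)


# Three ways of exposing the same scene (nominal full-stop values):
a = exposure_value(8, 1 / 125)            # deeper depth of field, slower shutter
b = exposure_value(5.6, 1 / 250)          # shallower depth of field, faster shutter
c = exposure_value(8, 1 / 250, iso=200)   # keep f/8 and a fast shutter by raising ISO
print(round(a, 2), round(b, 2), round(c, 2))
```

All three come out at almost exactly the same EV (f/5.6 is a rounded value, hence the small difference), so they let in the same amount of light; what changes is the depth of field, the motion blur and, with the higher ISO, the noise.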

Working with Small Circuits and Electronics

One of the media I decided I would like to try working with was electronics. I started by creating light-dependent light-up houses using a simple circuit connected with conductive paint. This helped me think of interactivity in terms of input and output, allowing me to see my piece from a more user-centric point of view. The input I chose was light level, which would come either from people looking at the piece or from the environment. To register the input, I integrated a light-dependent resistor into my circuit, hiding it in the chimney of the house. This limits the voltage that reaches the LEDs depending on how much light is available. The output was, of course, the light given out by the houses, in the form of two coloured LEDs per house. In this case the input and output were similar (both light), but in more complex pieces I could think about using light to control sound, or touch to control light, and so on. For a power source I used a standard 9V battery, and made a small paper pouch for it so that it didn't fall down. Finally, I used a transistor to join together the parts of the circuit and amplify the electrical signal, and a standard resistor to control the flow of current. I will consider using more electronics when making my piece, and will think about making my circuits more complex by adding extra components, experimenting with my inputs and outputs, and adding an element of coding into the mix.
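The arithmetic behind a circuit like this can be sketched in Python. A common way to use a light-dependent resistor is as one half of a voltage divider feeding a transistor's base; whether my houses were wired exactly this way is not recorded above, so treat the layout as an assumption. The resistances, the 2 V LED forward voltage and the 20 mA current are hypothetical round numbers, not measurements of my components.

```python
def divider_output(v_in, r_top, r_bottom):
    """Voltage across r_bottom in a two-resistor voltage divider."""
    return v_in * r_bottom / (r_top + r_bottom)


def led_series_resistor(v_supply, v_led, i_led):
    """Ohm's law sizing for an LED's current-limiting resistor."""
    return (v_supply - v_led) / i_led


# Hypothetical LDR values: high resistance in the dark, low in bright light.
fixed = 10_000  # ohms, hypothetical fixed resistor at the top of the divider
for label, ldr in [("dark", 100_000), ("bright", 1_000)]:
    print(label, round(divider_output(9.0, fixed, ldr), 2), "V")  # dark 8.18 V / bright 0.82 V

print(led_series_resistor(9.0, 2.0, 0.02), "ohms")
```

With the LDR at the bottom of the divider, the voltage passed on to the transistor rises as the room gets darker, so the LEDs would switch on in the dark; swapping the two resistors reverses that behaviour.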

FMP Glossary

To help me organise everything I already know, and to build up my knowledge and skill set, I have created a glossary of all the elements of my FMP. I took my animation glossary from the pathway stage and added terms to do with coding, app development, virtual reality, 3D cinema, interactive art, and anything else I think is relevant. I may continue by illustrating some of the entries to help me understand them.

3D

Everything that is built inside 3D software as a virtual three-dimensional object, even though once it is rendered it is viewed as a 2D image on a 2D screen. The difference between 2D and 3D is the method of creation: 2D is made up of lines on a plane with two axes, and 3D is made up of objects in a space with three axes.

Action

A description of something that an Intent sender wants done. An action is a string value assigned to an Intent. Action strings can be defined by Android or by a third-party developer. For example, android.intent.action.VIEW for a Web URL, or com.example.rumbler.SHAKE_PHONE for a custom application to vibrate the phone.

Activity

A single screen in an application, with supporting Java code, derived from the Activity class. Most commonly, an activity is visibly represented by a full-screen window that can receive and handle UI events and perform complex tasks, because of the Window it uses to render its UI. Though an Activity is typically full screen, it can also be floating or transparent.

Canvas

A drawing surface that handles compositing of the actual bits against a Bitmap or Surface object. It has methods for standard computer drawing of bitmaps, lines, circles, rectangles, text, and so on, and is bound to a Bitmap or Surface. Canvas is the simplest, easiest way to draw 2D objects on the screen. In early versions of Android it did not support hardware acceleration, as OpenGL ES does; hardware-accelerated Canvas drawing was added in Android 3.0. The base class is Canvas.

adb

Android Debug Bridge, a command-line debugging application included with the SDK. It provides tools to browse the device, copy files to and from the device, and forward ports for debugging. If you are developing in Eclipse using the ADT Plugin, adb is integrated into your development environment.

Animatic

A storyboard that is filmed, created digitally, or shown in a slide show with timings to create a flowing series of images that represent a film or animation. In its most complex form, some small elements may be simply animated, or camera movements such as panning and tracking shots may be used across the still image. Also referred to as a “Leica reel”.

Anticipation

An action has three stages: anticipation, action, and reaction. Anticipation is used to draw attention to an action: a character makes a movement to show that they are about to do something, for example leaning one way before running in the opposite direction. An anticipation can be followed by nothing, or by a different action from the one we expect, in which case it has a comic effect. Using a huge anticipation for a run, for example, and then only showing a reaction, such as the dust left behind by a character that has just zipped out of shot, makes the action clear even though we never actually saw it.

Appeal

The quality of a character's animation: how the character attracts the audience's eye through how it is drawn, the quality of its movement, its personality, design, acting, and so on.

.apk file

Android application package file. Each Android application is compiled and packaged in a single file that includes all of the application's code (.dex files), resources, assets, and manifest file. The application package file can have any name but must use the .apk extension. For example: myExampleAppname.apk. For convenience, an application package file is often referred to as an ".apk".

Application

From a component perspective, an Android application consists of one or more activities, services, listeners, and intent receivers. From a source file perspective, an Android application consists of code, resources, assets, and a single manifest. During compilation, these files are packaged in a single file called an application package file (.apk).

Arcs

An articulation’s path of action is normally an arc, unless we are animating something with jerky movement, like a robot. Arcs are generally more or less round, and can turn more or less abruptly. Keeping animation on clean arcs creates flow, realism, and logic, and makes the animation more pleasing overall.

Articulation

A character’s joints are known as their articulations. They can have 1, 2, or 3 degrees of rotational freedom (left/right, up/down, and twist), and can have any amount of flexibility.

Asset

All of the digital pieces which make up a game are known as 'assets'. A texture map, a model file, or a file on disk that tells how much health your character begins the game with are all examples of assets. Unity stores assets in the Project Folder.

Bake

Calculating and storing complex computations ahead of time is known as 'baking'. Lightmaps, for example, are 'baked' diffuse illumination. Baked data is usually much cheaper to use at run time (and so better for CPU performance), but it can use a lot of memory.

Bone

The hierarchy of transforms that make up a skinned model are called bones. Bones are usually animated outside of Unity but they can also be controlled by scripts.

Breakdown

When working pose to pose, the highest level of poses are the key poses, the keys are further detailed with extremes, and then further detailed with breakdown poses (and at the lowest level is the inbetween). Breakdowns, also known as 'passing' or 'middle' positions describe smaller bits of movement. An arc for instance can have two extremes and then, to further define it, you add one or more breakdowns. For example, if the extremes were left and right, the breakdown could be a middle “extreme”.

Breaking joints

Creating flexibility in articulations by bending joints in a direction that is not realistically possible and would break them in the real world. This is used to create the illusion of a curvy limb that waves like a flexible piece of rubber.

Broadcast Receiver

An application class that listens for Intents that are broadcast, rather than being sent to a single target application/activity. The system delivers a broadcast Intent to all interested broadcast receivers, which handle the Intent sequentially.

Budget

Games are limited by available memory and computing power. If the game demands too much memory (for example, because it's trying to draw a very complex 3D scene) or too much processing power (if it is attempting some extremely difficult calculations) it will slow down. For this reason most game teams set a budget for the amount of memory and computation they can use at a given time. Learning how to live within a budget is one of the keys to creating a game which plays smoothly.
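
A common way to think about the processing side of a budget is time per frame: at a target frame rate, each frame has 1000 / fps milliseconds to do all of its work. This Python sketch uses made-up costs for physics, scripts and rendering to show how a scene can fit comfortably at 30 or 60 fps but miss a 90 fps target, the kind of higher rate some VR headsets aim for.

```python
def frame_budget_ms(target_fps: float) -> float:
    """Milliseconds available to update and draw one frame."""
    return 1000.0 / target_fps


def fits_budget(costs_ms: dict[str, float], target_fps: float) -> bool:
    """True if the summed per-frame costs fit inside the frame budget."""
    return sum(costs_ms.values()) <= frame_budget_ms(target_fps)


# Hypothetical per-frame costs, in milliseconds
costs = {"physics": 2.5, "scripts": 3.0, "rendering": 7.0}

for fps in (30, 60, 90):
    verdict = "fits" if fits_budget(costs, fps) else "over budget"
    print(f"{fps} fps: {frame_budget_ms(fps):.1f} ms per frame -> {verdict}")
```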

Camera cut

An interruption of the sequence of images by changing to another sequence of images, separating two shots.

CG

Computer graphics. Everything that is drawn or built on, or by, a computer, that has a visual, graphical outcome and is used in film.

Claymation

A particular type of stop-motion animation that uses clay as a medium. Due to its mouldable nature the same piece of clay can be used for multiple frames by changing it slightly, instead of having a new model for each frame.

Collider

By default, gameObjects in Unity are visible but not tangible: they don't affect each other and aren't affected by gravity or other forces. A collider is a component which makes an object tangible to the physics system. Colliders are usually much simpler shapes than the objects they are attached to, which makes the physics calculations run faster.
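
To see why simple collider shapes are cheap, consider two sphere colliders: they touch exactly when the distance between their centres is no more than the sum of their radii, which is a single comparison rather than a test against every triangle of a detailed model. This Python sketch is a generic illustration of that test, not Unity's internal physics code; the SphereCollider class here is hypothetical.

```python
from dataclasses import dataclass


@dataclass
class SphereCollider:
    """Hypothetical stand-in for a sphere-shaped collider."""
    x: float
    y: float
    z: float
    radius: float


def spheres_overlap(a: SphereCollider, b: SphereCollider) -> bool:
    """Spheres touch when centre distance <= sum of radii.

    Comparing squared values avoids a square root, keeping the test cheap.
    """
    dx, dy, dz = a.x - b.x, a.y - b.y, a.z - b.z
    reach = a.radius + b.radius
    return dx * dx + dy * dy + dz * dz <= reach * reach


ball = SphereCollider(0.0, 0.0, 0.0, 1.0)
print(spheres_overlap(ball, SphereCollider(1.5, 0.0, 0.0, 1.0)))  # True
print(spheres_overlap(ball, SphereCollider(3.0, 0.0, 0.0, 1.0)))  # False
```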

Color grading

Closely related to, and often used interchangeably with, color correction. The process of altering an image's color balance, for example turning a color picture into sepia, or desaturating it.

Color script

A tool for organizing the overall color design for every scene in a movie. The color script is just a series of images, usually paintings, each of them representing the color mood of a certain scene.

Component

Components are nuggets of code which define how your game will play. Components are attached to one or more GameObjects; each component usually only affects the object to which it is attached.

Compositing

The process of adding layers of images to create a final image. It normally applies to working with image sequences that are rendered from 3D in separate layers. Compositing can also be used to put together layers of real film footage and photography to create a final image sequence, or to mix both media.

Console

The Console is a Unity panel which displays messages from Unity or from your game code. It tells you when errors occur but is also a useful way to print out information as you're experimenting with new ideas. The most recent console message is also displayed at the bottom of the main Unity window, even if the console is closed.

Contact

A contact position is used when a character “takes off” or “lands”; basically, whenever it has just come into contact with something, or is just about to leave it (so it’s not necessarily foot contact, but can be hand, head, anything). Often used as a stretched pose in cartoon animation, the contact helps the flow of images by connecting the object about to reach (or just departing from) a target… with the target. This contact kills strobing by filling empty space. Even though the contact position is often a weird, overstretched, exaggerated pose, it flows by so quickly that it can’t be seen, just felt, and it gives an organic continuity to the motion; it also helps describe a path of action. In a walk, the contact is the position where the foot touches the ground but has no weight on it yet (see path of action; strobing; weight).

Content Provider

A data-abstraction layer that you can use to safely expose your application's data to other applications. A content provider is built on the ContentProvider class, which handles content query strings of a specific format to return data in a specific format.

Coordinates

3D space is defined by the X, Y and Z axes. A location in 3D space can be specified with three numbers (in the order XYZ). So (0,0,0) represents the center of a scene, (1,0,0) a point 1 unit to the right of the center (in Unity the positive X axis points right), and so on. When transforms are parented to each other, the child transforms are positioned relative to their parent: as far as a child is concerned, (0,0,0) is the location of the parent object and not the center of the world.

Counteraction

Basically, as the body moves, the loose parts of the body, such as hair, breasts, belly fat or clothes, all stay behind and tend to move in the opposite direction. This is not to be mistaken for follow through, which is the action of those body parts AFTER the movement has come to a stop, nor for secondary action or overlap (see follow through; overlap; secondary action).

Dalvik

The Android platform's original virtual machine. The Dalvik VM executes files in the Dalvik Executable (.dex) format, a format that is optimized for efficient storage and memory-mappable execution. It began as an interpreter-only virtual machine; a JIT compiler was added in Android 2.2, and Dalvik was later replaced by the Android Runtime (ART) in Android 5.0. The virtual machine is register-based, and it can run classes compiled by a Java language compiler that have been transformed into its native format using the included "dx" tool. The VM runs on top of Posix-compliant operating systems, which it relies on for underlying functionality (such as threading and low level memory management). The Dalvik core class library is intended to provide a familiar development base for those used to programming with Java Standard Edition, but it is geared specifically to the needs of a small mobile device.

DDMS

Dalvik Debug Monitor Service, a GUI debugging application included with the SDK. It provides screen capture, log dump, and process examination capabilities. If you are developing in Eclipse using the ADT Plugin, DDMS is integrated into your development environment.

.dex file

Android programs are compiled into .dex (Dalvik Executable) files, which are in turn zipped into a single .apk file on the device. .dex files can be created by automatically translating compiled applications written in the Java programming language.

Dialog

A floating window that acts as a lightweight form. A dialog can have button controls only and is intended to perform a simple action (such as button choice) and perhaps return a value. A dialog is not intended to persist in the history stack, contain complex layout, or perform complex actions. Android provides a default simple dialog for you with optional buttons, though you can define your own dialog layout. The base class for dialogs is Dialog.

Diffuse

Diffuse lighting (as opposed to specular lighting) refers to the rendering of matte objects. Diffuse lighting depends entirely on the angle between the surface normal of an object and the light - it looks the same regardless of the viewer's position. Many shaders use the terms 'diffuse map', 'color map' and 'surface color' interchangeably.
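
The "angle between the surface normal and the light" is usually computed with Lambert's cosine law: brightness is the dot product of the unit normal and the unit direction towards the light, clamped at zero for surfaces facing away. This Python sketch is a textbook version of that formula, not the code of any particular shader; note that the viewer's position is not an input at all.

```python
import math

Vector = tuple[float, float, float]


def normalize(v: Vector) -> Vector:
    length = math.sqrt(sum(c * c for c in v))
    return (v[0] / length, v[1] / length, v[2] / length)


def dot(a: Vector, b: Vector) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def lambert_diffuse(normal: Vector, to_light: Vector, intensity: float = 1.0) -> float:
    """Diffuse brightness = intensity * max(0, N . L), independent of the viewer."""
    return intensity * max(0.0, dot(normalize(normal), normalize(to_light)))


floor_up = (0.0, 1.0, 0.0)
print(lambert_diffuse(floor_up, (0.0, 1.0, 0.0)))   # light straight above: 1.0
print(lambert_diffuse(floor_up, (1.0, 1.0, 0.0)))   # light at 45 degrees: about 0.707
print(lambert_diffuse(floor_up, (0.0, -1.0, 0.0)))  # light from below: 0.0
```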

Down position

Also called a low position. In a walk or a run, it's the lowest pose, with weight on it.

Drawable

A compiled visual resource that can be used as a background, title, or other part of the screen. A drawable is typically loaded into another UI element, for example as a background image. A drawable is not able to receive events, but does assign various other properties such as "state" and scheduling, to enable subclasses such as animation objects or image libraries. Many drawable objects are loaded from drawable resource files — xml or bitmap files that describe the image. Drawable resources are compiled into subclasses of android.graphics.drawable.

Extreme

The start and ending point of an action, of an arc for instance. In a walk, the extremes are the down/low and the up/high positions, in an arm swing the extremes are the most forward and the most backwards positions (see arcs; down position; up position).

FBX

A common format for storing 3D model files.

Figure 8

A particular type of arc that can be very successfully used for turns (but not only!) - basically, an articulation goes for example up and left and makes a right turn and then it comes back down and left, so it intersects the path it followed when it went up - a figure 8 can be very thin and barely look like an eight, and it can be incomplete, the turn is the idea. It’s always better to use a figure 8 and not a straight line with a sharp turn if animating a living thing (see arcs).

Follow through

Auxiliary elements of the body, like tails, floppy ears, clothing, etc, that are soft and flexible, do not come to a stop at the same time with the rest of the body, but follow through… and settle after a while (not to be mistaken for secondary action, which is conscious movement, nor for counteraction, which is about the dragging, while follow through is about the settling)(see counteraction; overlap;secondary action).

Fps

Frames per second, the unit of frame rate.

Frame

An image in a succession of images (that when played back give the illusion of movement).

Frame handles

Extra frames added at the beginning and/or end of a shot, also called cutting frames in the UK (see shot).

Frame rate

The number of frames per second. Animation, like film, traditionally works at 24 fps (see frame).
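
Because the frame rate is fixed, converting between seconds, frames and timecode is simple arithmetic. This Python sketch assumes 24 fps and the common hours:minutes:seconds:frames timecode layout; the helper names are my own.

```python
FPS = 24  # frames per second


def seconds_to_frames(seconds: float, fps: int = FPS) -> int:
    """Number of frames needed to fill a given duration."""
    return round(seconds * fps)


def frames_to_timecode(frame: int, fps: int = FPS) -> str:
    """Format a frame count as HH:MM:SS:FF."""
    total_seconds, frames = divmod(frame, fps)
    minutes, seconds = divmod(total_seconds, 60)
    hours, minutes = divmod(minutes, 60)
    return f"{hours:02d}:{minutes:02d}:{seconds:02d}:{frames:02d}"


print(seconds_to_frames(2.5))    # 60
print(frames_to_timecode(1500))  # 00:01:02:12
```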

Hard accent

Simply put: a bounce. You “hit” and change direction (see soft accent).

GameObject

Any object - for example, a model, a light, or a camera -- is a GameObject. Other asset types which don't get physically placed into the game on their own (such as textures or animations) are not considered gameObjects. Only GameObjects can have Components.

Game Window

A 3D view showing the game from the perspective of the currently active game camera.

Global Transform

Refers to a transform in absolute space. For example, a plate on a 3 foot high table may have a local transform height of zero if parented to the table, but its global transform height will be 3 feet. In Unity scripts we use transform.position and transform.rotation to access the global transform, and transform.lossyScale to read its global scale.

Hierarchy Window

Shows all of the objects in your current Unity scene, arranged in an outliner-like indented view.

Hold

A character striking a pose that’s a simple drawing with no animation on it. If there is very subtle animation, it’s not a hold anymore, it’s a moving hold (see moving hold).

Import

To bring an asset into Unity. Files can be imported through the import menu, or by file drag-and-drop into the project folder. If you update a file already in the project folder, Unity will automatically re-import it.

Inbetween

The smallest unit of an animation. Keys are further detailed with extremes, extremes are being broken down with…. breakdowns, and breakdowns are being broken down with inbetweens. The inbetweens in handdrawn animation are indicated by the animator, and drawn by the inbetweener. On a computer, the animator can use computer inbetweening - and use all kinds of interpolations in between keyframes (see extreme; interpolation; key; keyframe).

Intent

A message object that you can use to launch or communicate with other applications/activities asynchronously. An Intent object is an instance of Intent. It includes several criteria fields that you can supply to determine which application/activity receives the Intent and what the receiver does when handling it. Available criteria include the desired action, a category, a data string, the MIME type of the data, a handling class, and others. An application sends an Intent to the Android system, rather than sending it directly to another application/activity. The application can send the Intent to a single target application or it can send it as a broadcast, which can in turn be handled by multiple applications sequentially. The Android system is responsible for resolving the best-available receiver for each Intent, based on the criteria supplied in the Intent and the Intent Filters defined by other applications.

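Intent Filter

A filter that an application declares in its manifest file to tell the Android system which types of Intents each of its components is willing to accept.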

Interpolation

The way a computer calculates the path between 2 points. There can be many types of interpolations, such as linear, spline, stepped, etc, and sometimes they can be named differently in different software. Linear, as the name says it, calculates a simple, straight line between the points. The spline is a curve, and there are many types of curves that can be calculated between points, usually using manually editable tangents. Stepped is a direct jump from one point to another, without calculating any interpolation (it holds the value of point one until it's time to go to point two, and then jumps).
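
The three interpolation types can be written as small functions of a parameter t that runs from 0 at the first key to 1 at the second. This Python sketch uses a cubic Hermite curve as one example of a spline, where the tangents m0 and m1 play the role of the editable tangent handles; animation packages may use other curve types and other names.

```python
def linear(a: float, b: float, t: float) -> float:
    """Straight line between the two key values."""
    return a + (b - a) * t


def stepped(a: float, b: float, t: float) -> float:
    """Hold the first value until the second key is reached, then jump."""
    return a if t < 1.0 else b


def hermite(p0: float, p1: float, m0: float, m1: float, t: float) -> float:
    """Cubic Hermite spline from p0 to p1 with tangents m0 and m1."""
    t2, t3 = t * t, t * t * t
    h00 = 2 * t3 - 3 * t2 + 1   # weight of the start value
    h10 = t3 - 2 * t2 + t       # weight of the start tangent
    h01 = -2 * t3 + 3 * t2      # weight of the end value
    h11 = t3 - t2               # weight of the end tangent
    return h00 * p0 + h10 * m0 + h01 * p1 + h11 * m1


# Moving from 0 to 10, sampled a quarter of the way through:
print(linear(0, 10, 0.25))         # 2.5
print(stepped(0, 10, 0.25))        # 0
print(hermite(0, 10, 0, 0, 0.25))  # 1.5625 (flat tangents make it start slowly)
```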

Inspector

The Unity inspector window is used to examine or set the properties of gameObjects and assets.

Key

One of the few and most important poses in a shot, which is where its name comes from: you have a drawing on a frame that’s ‘key’ to the entire length of the shot or part of that shot. An entire shot can easily revolve around one or two keys. A key pose usually captures an expression, so I like to call it an expression pose (also called main or “golden” pose).

Keyframe

In computer animation, to keyframe an object or articulation means to lock it in time and space. A keyframe is a visual cue for that specific moment where that specific articulation is placed.

Layout

Pre-animation stage where you arrange the characters, cameras, props, all the objects in your scene that need to be ready for animation.

Layout Resource

An XML file that describes the layout of an Activity screen.

Lightmap

A lightmap is a texture which stores pre-computed diffuse lighting for a scene. This allows the computer to skip many repetitive lighting calculations (at the cost of more memory). Lightmaps are often used to create the illusion of shadows.
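
Baking a lightmap can be sketched as two steps: an expensive pass, run once ahead of time, that computes diffuse lighting for every texel, and a cheap run-time lookup. This Python sketch uses a tiny hypothetical grid of surface normals and simple Lambert (cosine) lighting from one directional light; real lightmappers also trace shadows and bounced light, which is left out here.

```python
import math

Vector = tuple[float, float, float]


def unit(v: Vector) -> Vector:
    length = math.sqrt(sum(c * c for c in v))
    return (v[0] / length, v[1] / length, v[2] / length)


def diffuse(normal: Vector, to_light: Vector) -> float:
    """Lambert (cosine) diffuse term, clamped at zero."""
    n, l = unit(normal), unit(to_light)
    return max(0.0, n[0] * l[0] + n[1] * l[1] + n[2] * l[2])


def bake_lightmap(normals: list[list[Vector]], to_light: Vector) -> list[list[float]]:
    """Bake step: run the lighting maths once per texel and store the results."""
    return [[diffuse(n, to_light) for n in row] for row in normals]


def shade(lightmap: list[list[float]], albedo: float, x: int, y: int) -> float:
    """Run-time step: a cheap lookup instead of recomputing the lighting."""
    return albedo * lightmap[y][x]


# Hypothetical 2x2 patch of floor: three texels face up, one faces sideways
normals = [[(0, 1, 0), (0, 1, 0)],
           [(0, 1, 0), (1, 0, 0)]]
lightmap = bake_lightmap(normals, to_light=(0, 1, 0))
print(lightmap)                    # [[1.0, 1.0], [1.0, 0.0]]
print(shade(lightmap, 0.8, 0, 0))  # 0.8
```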

Line of action

An imaginary line that you can draw along the character’s pose. A character’s body posture flows along this imaginary line of action. Flexible and dynamic line shapes tend to be arched (C-like) or S-like, with the legs and hands either flowing along the line or opposing it. Natural/realistic body poses are also often based on C and S shapes, but are more diverse, and may also be less dynamic, with less visual impact.

Lipsync

Animating the mouth (so it actually includes the tongue as well, not only the lips) to synchronize with the speech on the soundtrack.

Local Transform

Refers to a transform that is defined relative to another. For example, a plate on a 3 foot high table may have a local transform height of 0 if it is parented to the table, even though its absolute position is still three feet above the zero plane. In Unity scripts we use transform.localPosition, transform.localRotation and transform.localScale to access the local transform.
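
The plate-and-table example can be written out directly. This Python sketch is a simplified model that assumes parents only translate their children (real Unity transforms also carry rotation and scale); the Node class is hypothetical and only mimics the idea behind transform.localPosition and transform.position.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional

Vec3 = tuple[float, float, float]


@dataclass
class Node:
    """Hypothetical scene object with a position relative to its parent."""
    name: str
    local_position: Vec3
    parent: Optional[Node] = None

    def global_position(self) -> Vec3:
        """Walk up the hierarchy, adding each parent's offset (translation only)."""
        x, y, z = self.local_position
        if self.parent is None:
            return (x, y, z)
        px, py, pz = self.parent.global_position()
        return (px + x, py + y, pz + z)


table = Node("table", (2.0, 3.0, 0.0))          # table top 3 feet above the floor
plate = Node("plate", (0.0, 0.0, 0.0), table)  # sits on the table: local height 0
print(plate.global_position())                 # (2.0, 3.0, 0.0)

table.local_position = (5.0, 3.0, 0.0)         # move the table...
print(plate.global_position())                 # ...and the plate follows: (5.0, 3.0, 0.0)
```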

Manifest File

An XML file that each application must define to describe the application's package name, version, components (activities, intent filters, services), imported libraries, and so on.

Mesh

A mesh is a collection of vertices, or 3D points. The computer draws the mesh as a collection of facets, or polygons.

Moving hold

In computer animation especially, holds are moving holds, meaning that you can’t simply freeze the character into a pose or it will look very dead… A moving hold is basically very subtle animation on a character that doesn’t move, or moves very little (see hold).

Nine-patch / 9-patch / Ninepatch image

A resizeable bitmap resource that can be used for backgrounds or other images on the device.

Normal

The direction which a 3D polygon faces is its 'normal'. It's mathematically expressed as a 3D vector. Normals are used to calculate light and shading, to figure out whether objects are visible from behind, and to calculate the effects of physics collisions.
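
For a triangle, the normal is usually found with a cross product of two of its edges, then scaled to length 1. This Python sketch shows that calculation; the order of the vertices decides which way the normal points, and which order counts as "front-facing" depends on the software.

```python
import math

Vector = tuple[float, float, float]


def subtract(a: Vector, b: Vector) -> Vector:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def cross(a: Vector, b: Vector) -> Vector:
    """Vector perpendicular to both a and b."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def face_normal(p0: Vector, p1: Vector, p2: Vector) -> Vector:
    """Unit normal of triangle p0-p1-p2; assumes the points are not in a line."""
    n = cross(subtract(p1, p0), subtract(p2, p0))
    length = math.sqrt(n[0] ** 2 + n[1] ** 2 + n[2] ** 2)
    return (n[0] / length, n[1] / length, n[2] / length)


# A triangle lying flat on the floor (y = 0)
print(face_normal((0, 0, 0), (0, 0, 1), (1, 0, 0)))  # (0.0, 1.0, 0.0): facing up
print(face_normal((0, 0, 0), (1, 0, 0), (0, 0, 1)))  # (0.0, -1.0, 0.0): reversed order
```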

Ones

Animation “on ones” means a drawing (or image render) per each frame. In handdrawn animation this is usually used in fast and/or detailed, intricate motion. Computer animation renders everything frame by frame, so normally there is no “ones versus twos” battle (see twos).
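
Working on ones or twos changes how many drawings a shot needs. This Python sketch assumes 24 fps and simply holds each drawing for a fixed number of frames; the function name is my own.

```python
import math

FPS = 24


def drawings_needed(seconds: float, held_frames: int = 1, fps: int = FPS) -> int:
    """Drawings for a shot when each drawing is held for `held_frames` frames.

    held_frames=1 is animating on ones, 2 is on twos, and so on.
    """
    total_frames = round(seconds * fps)
    return math.ceil(total_frames / held_frames)


for held, name in [(1, "ones"), (2, "twos"), (3, "threes")]:
    print(f"A 3-second shot on {name}: {drawings_needed(3, held)} drawings")
```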

OpenGL ES

Android provides OpenGL ES libraries that you can use for fast, complex 3D images. It is harder to use than a Canvas object, but better for 3D objects. The android.opengl and javax.microedition.khronos.opengles packages expose OpenGL ES functionality.

Overlap

Delaying different body parts or any animated elements so you don’t have everything hitting an accent or coming to a stop on the same frame (see counteraction; follow through; secondary action).
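
One simple way to create overlap in computer animation is to give each part of a chain the same motion as its leader, offset by a few frames, so the parts settle one after another. This Python sketch uses a hypothetical rotation curve (one value per frame) and a fixed delay per part; in practice animators usually adjust each part by hand rather than using a constant offset.

```python
def delayed(curve: list[float], delay_frames: int) -> list[float]:
    """Same motion, starting `delay_frames` later; the first value is held meanwhile."""
    if not curve:
        return []
    delay_frames = max(0, min(delay_frames, len(curve)))
    return [curve[0]] * delay_frames + curve[: len(curve) - delay_frames]


def last_change_frame(curve: list[float]) -> int:
    """Index of the frame on which the part finally comes to rest."""
    for i in range(len(curve) - 1, 0, -1):
        if curve[i] != curve[i - 1]:
            return i
    return 0


# Hypothetical arm swing in degrees, one value per frame
upper_arm = [0, 10, 20, 30, 30, 30, 30, 30]
forearm = delayed(upper_arm, 2)
hand = delayed(upper_arm, 4)
for name, curve in [("upper arm", upper_arm), ("forearm", forearm), ("hand", hand)]:
    print(f"{name:9} {curve} settles on frame {last_change_frame(curve)}")
```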

Overshooting

Going over the limits of an extreme, or of any pose, and then coming back to that pose - to give it an extra punch.

Parent

Transforms can be linked so that one transform is relative to another, and moves when the other moves: for example, moving your shoulder causes your elbow to move, moving your elbow causes your wrist to move, and so on. The transform which causes the other to move is known as the "parent", any transforms which are moved by a parent are "children". A parent can have many children, but each child has only a single parent. We also refer to the act of linking transforms in this way as "parenting".
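
The shoulder, elbow and wrist example can be sketched in 2D: each joint's angle is measured relative to its parent, so rotating the shoulder carries the whole arm with it. This Python sketch is a simplified forward-kinematics model with made-up bone lengths; it illustrates parenting in general, not how any specific program stores its transforms.

```python
import math


def chain_positions(lengths: list[float], angles_deg: list[float]) -> list[tuple[float, float]]:
    """2D forward kinematics for a chain of parented joints.

    Each angle is relative to the parent bone, so a child inherits every
    rotation above it in the hierarchy.
    """
    x = y = heading = 0.0
    points = [(x, y)]
    for length, angle in zip(lengths, angles_deg):
        heading += math.radians(angle)  # add this joint's rotation to its parent's
        x += length * math.cos(heading)
        y += length * math.sin(heading)
        points.append((x, y))
    return points


arm = [3.0, 2.5, 1.0]  # hypothetical upper arm, forearm and hand lengths
for pose in ([0, 0, 0], [90, 0, 0], [0, 90, 0]):
    fingertip = chain_positions(arm, pose)[-1]
    print(pose, "->", tuple(round(c, 2) for c in fingertip))
```

Rotating only the shoulder (the second pose) swings the fingertip from (6.5, 0) to (0, 6.5), while bending only the elbow (the third pose) leaves the upper arm where it was.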

Path of action

Any animated object, or body, or articulation, travels along a path of action, which is usually an arc. Only robots or mechanical things can have truly linear movements, but for organic life or just about anything found in nature, when in movement, its paths of action will be arched (see arcs; articulation).

Polygon

Three or more vertices which form a planar facet that can be rendered in 3D.

Pose

A pose, or position, or a drawing, is basically what it sounds like - positioning (or drawing) the body (and facial expression maybe) to describe/express something; (see pose to pose animation).

Pose to pose animation

A method of animating: the animator sets layers of poses and works in an organized fashion by breaking down the movement in more and more detail. The highest layer is that of the key poses, then come the extremes, then breakdowns (as many layers as needed - some people prefer to add layers and keep breaking things down in more and more detail), and finally, the lowest, more mechanical level, of the inbetweens (this method tends to be too rigid, it’s ideal to combine it with straight ahead animation and work straight ahead between the main poses, that I like to call “pillars”)(see pose; straight ahead animation).

Postproduction

Everything there is to do after the actual production. There is color grading, editing, sound design… last minute panic, all that jazz. Also, in vfx, postproduction means all that is done after the actual shooting, so everything related to cg vfx would be postproduction (see cg; color grading; jazz; preproduction; production; shooting; vfx).

Prefab

Stores a collection of GameObjects, along with any components, materials, textures and settings. Prefabs are stored as ".prefab" files in the project folder.

Preproduction

Preparations for the film, which include research, using inspirational artwork, designing characters, sets, props, creating a color script, developing the story, creating a storyboard and upgrading it to an animatic, etc. In the case of real film, preproduction also includes creating props and sets, since production only involves actual shooting (see animatic; color script; postproduction; production; prop; set; shooting; storyboard).

Production

The actual work on the film once the preproduction stage is done. In a 3D pipeline, for example, it means modeling, texturing, shading, rigging, dynamic simulations, lighting and rendering. In film, production is considered only the actual shooting process (see postproduction; preproduction;shooting).

Progressively breaking joints

We apply the breaking joints technique to an entire chain. Joints in a flexible chain follow a leader who’s generating the motion, and the motion propagates through this chain in such a way that 2 follows 1, 3 follows 2 and so on. It’s the overlap principle applied to a chain of breaking joints (see breaking joints).

Project Folder

Every Unity game defines a 'Project Folder' where all of the assets are stored. Anything inside the project folder is available to the game. Usually the project folder will be stored in your Documents folder. Since all assets live in the project folder, we usually refer to them by relative paths such as Assets/levels/intro.unity or Assets/textures/bowling_pin.tif.

Project Window

The project window shows all of the assets in your project folder.

Prop

Object used by a character.

Reaction

An action has three stages: anticipation, action, and reaction. An action can be followed by a reaction to that action, and this reaction can be that of the character, of the environment, of an object… (if you fly and hit a wall, for example, the hit is a reaction). Reaction can be used with great effect in film when you have multiple layers of reaction (you hit a wall, the wall fractures, you fall down, the wall fractures some more, the wall collapses on top of you, the entire construction that was held by that wall collapses, the entire city is left in darkness because the construction was an electrical power plant or something… and so on, an entire chain of events) (see anticipation).

Resources

Nonprogrammatic application components that are external to the compiled application code, but which can be loaded from application code using a well-known reference format. Android supports a variety of resource types, but a typical application's resources would consist of UI strings, UI layout components, graphics or other media files, and so on. An application uses resources to efficiently support localization and varied device profiles and states. For example, an application would include a separate set of resources for each supported locale or device type, and it could include layout resources that are specific to the current screen orientation (landscape or portrait). The resources of an application are always stored in the res/* subfolders of the project.

Rotoscoping

Copying movement by tracing video frame by frame (helpful sometimes...). Also, used in compositing to create masks (see compositing).

Scene

Aka a "level." The basic work unit in Unity: a collection of gameObjects and their associated components. Scenes are saved in the project folder as files with a ".unity" extension.

Scene

Normally referred to as a sequence of shots where all the action happens within a given space and time, with no jumps to a different space and/or time.

Scene Window

A 3D view showing your currently opened scene file.

Script

A small, modular piece of computer code which controls one aspect of a Unity game. Most components are created by scripts, and people will occasionally use the terms interchangeably.

Secondary action

Small auxiliary movements meant to enrich the main action, but ideally... not distract from it. Fingers playing, shoulders going back and forward, tail animation, ears animation (but not when these are being simply dragged or pushed or settling, that’s counteraction and follow through), blinks, etc (see counteraction; follow through).

Service

An object of class Service that runs in the background (without any UI presence) to perform various persistent actions, such as playing music or monitoring network activity.

Set

The happy place where the action happens. In 2D there might be no set if all there is is painted backgrounds, but in 3D or stopmotion you need to build the set from the ground up (unless, again, you use background images).

Shot

The smallest fragment of a film, the amount of film rolling in between two camera cuts (see camera cut).

Shooting

The process of recording images on film using a real camera. Usually nobody gets hurt.

Skinning

Skinning is a way of allowing several different transforms (or 'bones') to move the vertices of a model. This allows for models which deform realistically, rather than behaving like hard mechanical surfaces.
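
A common way to let several bones move one vertex is linear blend skinning: move the vertex with each bone separately, then average the results using per-bone weights that add up to 1. This Python sketch is a 2D simplification in which each hypothetical bone is just a pivot point and a rotation angle; real engines use full bone matrices.

```python
import math

Point = tuple[float, float]


def rotate_about(point: Point, pivot: Point, angle_deg: float) -> Point:
    """Rotate `point` around `pivot` by `angle_deg` degrees (counter-clockwise)."""
    a = math.radians(angle_deg)
    dx, dy = point[0] - pivot[0], point[1] - pivot[1]
    return (pivot[0] + dx * math.cos(a) - dy * math.sin(a),
            pivot[1] + dx * math.sin(a) + dy * math.cos(a))


def skin_vertex(vertex: Point, bones: list[tuple[Point, float]], weights: list[float]) -> Point:
    """Linear blend skinning: transform by each bone, then blend by weight.

    Assumes the weights add up to 1.
    """
    x = y = 0.0
    for (pivot, angle), weight in zip(bones, weights):
        bx, by = rotate_about(vertex, pivot, angle)
        x += weight * bx
        y += weight * by
    return (x, y)


# Hypothetical arm: the upper arm pivots at the shoulder (0, 0) and stays still,
# the forearm pivots at the elbow (3, 0) and bends 90 degrees.
bones = [((0.0, 0.0), 0.0), ((3.0, 0.0), 90.0)]
vertex = (4.0, 0.0)  # a point on the skin just past the elbow

for weights in ([1.0, 0.0], [0.5, 0.5], [0.0, 1.0]):
    x, y = skin_vertex(vertex, bones, weights)
    print(weights, "->", (round(x, 2), round(y, 2)))
```

A 50/50 vertex lands at (3.5, 0.5), cutting the corner between the two bones, which is one reason heavily bent joints can look pinched with this method.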

Slow in

Also referred to as “ease in”. A movement that starts slower and then accelerates is a movement with a slow in (see slow out).

Slow out

Also referred to as “ease out”. A movement that slows down towards its end is a movement with a slow out (see slow in).
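The two definitions above can be written as easing curves that map normalized time t (0 at the first key, 1 at the second) to the fraction of the distance covered. This Python sketch uses simple quadratic curves, which are only one possible choice (cubic and sine-based curves are also common), and follows the definitions above, where slow in means starting slowly.

```python
def ease_in(t):
    """Slow in (ease in): starts slowly, then accelerates. t runs from 0 to 1."""
    return t * t


def ease_out(t):
    """Slow out (ease out): moves quickly at first, then slows down towards the end."""
    return 1 - (1 - t) * (1 - t)


def inbetweens(start, end, frames, ease):
    """Positions on each frame from start to end (both keys included) along an easing curve."""
    return [start + (end - start) * ease(i / (frames - 1)) for i in range(frames)]


print(inbetweens(0, 100, 5, ease_in))   # [0.0, 6.25, 25.0, 56.25, 100.0]: gaps grow, the move speeds up
print(inbetweens(0, 100, 5, ease_out))  # [0.0, 43.75, 75.0, 93.75, 100.0]: gaps shrink, the move slows down
```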

Soft accent

An accent created by a moving object, body or articulation without changing the direction of action, simply by altering timing and spacing: introducing fast movement in slow action and vice versa. The contrast doesn’t need to be significant to be noticeable. Basically, you “hit” and continue in the same direction (see hard accent; timing and spacing).

Specular

Specular lighting refers to the lighting calculations for shiny or glossy materials such as plastic or metal. Unlike diffuse lighting, the size and intensity of specular highlights depend on the position of the viewer as well as on the position of the lights and the surface normals.
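One common way to compute this is the Blinn-Phong model, sketched below in Python as an illustration rather than what any particular engine or shader necessarily uses. The diffuse term depends only on the surface normal and the light direction, while the specular term also uses the direction to the viewer, which is why a highlight slides across a surface as the camera moves. The shininess exponent of 32 is an arbitrary assumed value; higher values give smaller, tighter highlights.

```python
import math


def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def lambert_diffuse(normal, to_light):
    """Diffuse intensity: depends on the normal and the light, not on the viewer."""
    return max(dot(normalize(normal), normalize(to_light)), 0.0)


def blinn_phong_specular(normal, to_light, to_viewer, shininess=32.0):
    """Specular intensity in [0, 1]. All three directions point away from the surface."""
    n, l, v = normalize(normal), normalize(to_light), normalize(to_viewer)
    if dot(n, l) <= 0 or dot(n, v) <= 0:
        return 0.0  # light or viewer is behind the surface: no highlight
    h = normalize(tuple(a + b for a, b in zip(l, v)))  # half-vector between light and view
    return max(dot(n, h), 0.0) ** shininess


up = (0.0, 1.0, 0.0)
light = (0.0, 1.0, 0.0)
for viewer in [(0.0, 1.0, 0.0), (1.0, 1.0, 0.0)]:
    # Diffuse stays the same for both viewers; only the specular value changes.
    print(viewer, lambert_diffuse(up, light), round(blinn_phong_specular(up, light, viewer), 3))
```

Looking straight down onto the surface gives a specular value of 1.0; moving the viewer 45 degrees to the side drops it to roughly 0.08, while the diffuse value stays at 1.0.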

Squash and stretch

It means two things: 1. squashing and stretching a body - to enhance a movement and make the character more flexible (rigid parts of the body, like the cranium, should normally be kept rigid); 2. squashing and stretching a pose - this doesn’t involve any deformations, but being aware of them while posing enhances movement. Life is a continuous squash and stretch.

Stopmotion animation

Animation with real-world puppets, where the camera takes a picture and then the animators rearrange the puppet, the camera takes another picture, and so on. Basically, we have a camera that is “stopped at every frame” (also called stopframe animation, stopmo, claymation - when the puppets are clay sculptures).

Storyboard

A tool for planning film. A sequence of images, usually rough drawings on small pieces of paper, pinned to a board, visually describing a story. Each image describes an event. So a shot could be described with just one, or several images. The storyboard is often used during the development of the story, in the preproduction of a film. It’s easy to change story structure by rearranging the drawings pinned on the board, making new drawings, etc. (see animatic; shot).

Straight ahead animation

A method of animating: the animator works frame by frame in a continuous creative flow. On its own, this method tends to be too loose, unfocused, and hard to control in an animation production. It’s ideally combined with pose to pose animation, with the animator working straight ahead between the main poses (see pose; pose to pose animation).

Strobing

Flickering animation, due to poses that don't seem connected from one frame to another. If the distance an object moves between successive frames is too great, and there is no motion blur or cartoon stretch to overlap its positions, the eye perceives a break in the flow of images. Panned backgrounds can produce this jitter at certain speeds, but not all images seem to produce jitter: it depends on softness, color and contrast, and there may be other factors too. For images to flow well one into another, they have to be connected in such a way that the eye doesn’t read any obstruction, break, or unexpected change.

Surface

An object of type Surface representing a block of memory that gets composited to the screen. A Surface holds a Canvas object for drawing, and provides various helper methods to draw layers and resize the surface. You should not use this class directly; use SurfaceView instead.

SurfaceView

A View object that wraps a Surface for drawing, and exposes methods to specify its size and format dynamically. A SurfaceView provides a way to draw independently of the UI thread for resource-intensive operations (such as games or camera previews), but it uses extra memory as a result. SurfaceView supports both Canvas and OpenGL ES graphics. The base class is SurfaceView.

Texture

A 2-d bitmap image that is applied to a 3d model.

Theme

A set of properties (text size, background color, and so on) bundled together to define various default display settings. Android provides a few standard themes, listed in R.style (starting with "Theme_").

Timing and spacing

Abstract concepts created by animators to be used as animation tools.

Timing is the easier of the two to explain: it represents how much time passes between two keys.

Spacing describes what happens between the two keys: whether the movement is even, accelerates, or slows down. The more space there is between successive poses, the faster the object appears to move, and the less space, the slower. So spacing relates to time more than to space, and is actually a timing tool, because the difference in spatial positioning creates a difference in speed.
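A small Python sketch of the difference, assuming a frame rate of 24 frames per second (a common film rate; change FPS for other projects). The two position lists below share the same timing, with the same start, end and number of frames, but different spacing, and the per-frame gaps directly determine the speed.

```python
FPS = 24  # assumed frame rate


def timing_seconds(key_a_frame, key_b_frame, fps=FPS):
    """Timing: how much time passes between two keys."""
    return (key_b_frame - key_a_frame) / fps


def spacing(positions):
    """Spacing: how far the object travels from each frame to the next."""
    return [b - a for a, b in zip(positions, positions[1:])]


def speeds(positions, fps=FPS):
    """Bigger gaps between frames mean faster movement (units per second)."""
    return [gap * fps for gap in spacing(positions)]


even = [0, 15, 30, 45, 60]         # same start, end and number of frames...
accelerating = [0, 5, 15, 30, 60]  # ...but the gaps grow, so the move speeds up

print(timing_seconds(0, 12))                     # 0.5: twelve frames at 24 fps
print(spacing(even), speeds(even))               # [15, 15, 15, 15] [360, 360, 360, 360]
print(spacing(accelerating), speeds(accelerating))  # [5, 10, 15, 30] [120, 240, 360, 720]
```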

Transform

The collection of movement, rotation and scale which defines an object's position in space is known as its transform. Transforms can be either local or global.
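The difference between local and global transforms can be sketched in Python with a simplified 2D transform. The position, rotation and single uniform scale used here are an assumption to keep the example short; Unity's transforms are 3D and allow a different scale per axis. A child's local values are relative to its parent, so its global position is found by applying each transform in turn, from the object up to the root.

```python
import math
from dataclasses import dataclass


@dataclass
class Transform2D:
    """Hypothetical 2D transform: position, rotation and a single uniform scale."""
    x: float = 0.0
    y: float = 0.0
    rotation_deg: float = 0.0
    scale: float = 1.0

    def apply(self, px, py):
        """Map a point from this transform's local space into its parent's space."""
        a = math.radians(self.rotation_deg)
        sx, sy = px * self.scale, py * self.scale  # 1. scale
        rx = sx * math.cos(a) - sy * math.sin(a)   # 2. rotate
        ry = sx * math.sin(a) + sy * math.cos(a)
        return rx + self.x, ry + self.y            # 3. translate


def global_position(chain):
    """chain: transforms from the object up to the root, e.g. [child, parent, root]."""
    px, py = 0.0, 0.0  # the object's own origin in its local space
    for t in chain:
        px, py = t.apply(px, py)
    return px, py


parent = Transform2D(x=10.0, y=0.0, rotation_deg=90.0, scale=2.0)
child = Transform2D(x=1.0, y=0.0)  # local: one unit along the parent's X axis
print(global_position([child, parent]))  # roughly (10.0, 2.0)
```

The child is only one unit away from its parent locally, but because the parent is scaled by 2 and rotated 90 degrees, the child ends up two units above the parent in global space.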

Trigger

A collider with the IsTrigger checkbox turned on acts as a trigger rather than a physics volume. Scripts can detect when a gameObject enters a trigger -- for example, a character walking into a trigger could spring a trap, or a ball entering a score trigger could give the player points.
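Unity reports trigger contacts to scripts through callbacks such as OnTriggerEnter. The Python sketch below only imitates the underlying idea with a hypothetical box-shaped trigger: it tracks which objects are inside and reports an "enter" or "exit" event when one crosses the boundary, without applying any physical push.

```python
from dataclasses import dataclass, field


@dataclass
class BoxTrigger:
    """Hypothetical axis-aligned trigger volume: it detects overlap but never blocks movement."""
    min_corner: tuple
    max_corner: tuple
    inside: set = field(default_factory=set)

    def contains(self, point):
        return all(lo <= p <= hi for lo, p, hi in zip(self.min_corner, point, self.max_corner))

    def check(self, name, point):
        """Return 'enter' or 'exit' when an object crosses the boundary, otherwise None."""
        now_inside = self.contains(point)
        was_inside = name in self.inside
        if now_inside and not was_inside:
            self.inside.add(name)
            return "enter"
        if was_inside and not now_inside:
            self.inside.discard(name)
            return "exit"
        return None


score_zone = BoxTrigger((0, 0, 0), (2, 2, 2))
for position in [(-1, 1, 1), (1, 1, 1), (1.5, 1, 1), (3, 1, 1)]:
    event = score_zone.check("ball", position)
    if event == "enter":
        print("ball entered the score trigger: award points")
    elif event == "exit":
        print("ball left the score trigger")
```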

Twos

Animation “on twos” means a drawing (or image render) for every two frames. Each drawing is held for two frames, which means half the number of drawings. In hand-drawn animation, twos are usually used for normal-speed and slow movement. Stopmotion also uses twos (see ones).
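Working on twos can be expressed as a simple mapping from frame number to drawing number. A minimal Python sketch, assuming 0-based frame numbers; the function names are hypothetical.

```python
def drawing_for_frame(frame, step=2):
    """Which drawing is shown on a given frame (0-based).

    step=1 is 'on ones', step=2 is 'on twos': each drawing is held for `step` frames.
    """
    return frame // step


def drawings_needed(total_frames, step=2):
    """Number of unique drawings for a shot of total_frames frames."""
    return -(-total_frames // step)  # ceiling division


print([drawing_for_frame(f) for f in range(6)])  # [0, 0, 1, 1, 2, 2]
print(drawings_needed(24), drawings_needed(24, step=1))  # 12 24: one second at 24 fps, on twos vs on ones
```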

Up position

Also called a high position. In a walk or a run (etc) it is the highest pose, with the weight traveling upwards, maybe being thrown up in the air by the body movement.

Update Loop

Several times a second, the game tells all of the active GameObjects and components to update themselves. This causes the scripts attached to each component to run their Update code, which can make objects move or take actions.
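The loop described above can be sketched in Python. This is a simplified model under stated assumptions, not Unity's actual engine code: the update rate is fixed at 60 per second, and each hypothetical component receives the elapsed time dt so that its movement does not depend on how often it is updated. Unity scripts typically achieve the same effect by multiplying movement by Time.deltaTime.

```python
class Mover:
    """Hypothetical component that slides along X at a fixed speed (units per second)."""

    def __init__(self, speed):
        self.speed = speed
        self.x = 0.0

    def update(self, dt):
        self.x += self.speed * dt


class Spinner:
    """Hypothetical component that rotates at a fixed rate (degrees per second)."""

    def __init__(self, degrees_per_second):
        self.degrees_per_second = degrees_per_second
        self.angle = 0.0

    def update(self, dt):
        self.angle += self.degrees_per_second * dt


def run(components, seconds, updates_per_second=60):
    """Several times a second, tell every active component to update itself."""
    dt = 1.0 / updates_per_second
    for _ in range(round(seconds * updates_per_second)):
        for component in components:
            component.update(dt)


ball, fan = Mover(speed=5.0), Spinner(degrees_per_second=90.0)
run([ball, fan], seconds=2.0)
print(round(ball.x, 6), round(fan.angle, 6))  # about 10 units and 180 degrees after two seconds
```

Because every update is scaled by dt, running the same two seconds at 30 updates per second moves the ball the same distance, just in bigger steps.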

URIs in Android

Android uses URI strings as the basis for requesting data in a content provider (such as to retrieve a list of contacts) and for requesting actions in an Intent (such as opening a Web page in a browser). The URI scheme and format are specialized according to the type of use, and an application can handle specific URI schemes and strings in any way it wants. Some URI schemes are reserved by system components. For example, requests for data from a content provider must use the content:// scheme. In an Intent, a URI using an http:// scheme will be handled by the browser.
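To illustrate how the scheme decides who handles a URI, here is a small Python sketch using the standard library's urllib.parse.urlparse. The route function and the example URIs are hypothetical stand-ins; on Android, this matching is done by the system and the app's own code, not by a function like this.

```python
from urllib.parse import urlparse


def route(uri):
    """Hypothetical dispatcher: choose a handler from the URI scheme, as described above."""
    parts = urlparse(uri)
    if parts.scheme == "content":
        return ("content provider", parts.netloc, parts.path)
    if parts.scheme in ("http", "https"):
        return ("browser", parts.netloc, parts.path)
    return ("unhandled", parts.scheme, parts.path)


print(route("content://contacts/people/1"))  # ('content provider', 'contacts', '/people/1')
print(route("http://example.com/page"))      # ('browser', 'example.com', '/page')
```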

Vertex

A vertex (pl. 'vertices') is one point of a 3d mesh. The visible surface of a model is drawn by the computer as a series of facets connecting vertices.

Vfx

Visual effects. Everything that needs to be added to real film shooting in order to create final imagery. This can include 2D and 3D graphics, compositing, color grading, etc. (see 2D; 3D; color grading; compositing).

View

An object that draws to a rectangular area on the screen and handles click, keystroke, and other interaction events. A View is a base class for most layout components of an Activity or Dialog screen (text boxes, windows, and so on). It receives calls from its parent object (see viewgroup, below) to draw itself, and informs its parent object about where and how big it would like to be (which may or may not be respected by the parent).

Viewgroup

A container object that groups a set of child Views. The viewgroup is responsible for deciding where child views are positioned and how large they can be, as well as for calling each to draw itself when appropriate. Some viewgroups are invisible and are for layout only, while others have an intrinsic UI (for instance, a scrolling list box). Viewgroups are all in the widget package, but extend ViewGroup.

Weight

Any body or object has a certain weight, which we can read/perceive/understand from its movement and its interaction with other bodies or objects. A heavy object, for example, is hard to move, and a heavy body in movement will put more effort into fighting gravity. A heavy object or body is hard to start and hard to stop. Each foot of a heavy character leaves the ground for only a very short time and comes back down really fast, so the illusion of weight is mainly created with timing. A heavy box is hard to lift, so the character has to position itself in such a way as to accommodate that difficult task, and also think about the action before doing it. In this case we help achieve the illusion of weight through attitude and posing.

Weight shift

The weight of a body in motion shifts from one part of the body to another as the body changes its center of balance. For instance, in a walk we keep shifting the weight from one foot to the other. Being aware of where the weight is and how it shifts is essential to creating believable movement. If, for example, the character is out of balance because its weight has shifted to its left, and the left foot is in the air, and the animator holds that pose without the character collapsing, it’s wrong. When cartoons purposefully defy the laws of physics for the sake of humor, we can accept that because we understand the context. But in most cases, to create believable movement, you need to abide by the laws of physics. Also, for believability in unrealistic situations, even if a cartoon character literally walks on air because they haven’t read about gravity yet, that walk still needs proper weight.

Widget

One of a set of fully implemented View subclasses that render form elements and other UI components, such as a text box or popup menu. Because a widget is fully implemented, it handles measuring and drawing itself and responding to screen events. Widgets are all in the android.widget package.

Window

In an Android application, an object derived from the abstract class Window that specifies the elements of a generic window, such as the look and feel (title bar text, location and content of menus, and so on). Dialog and Activity use an implementation of this class to render a window. You do not need to implement this class or use windows in your application.

XYZ

The coordinate system for a 3-d space has three main directions, or 'axes'. In Unity, the ground plane is defined by the X and Z axes, and the vertical dimension is Y. The origin is the 3-d point located at X=0, Y=0, Z=0. Positions, rotations and scales are all defined for each axis, so an object might be located at (0, 10, 0) -- that is, ten units off the ground -- and rotated (45, 0, 0) -- that is, rotated 45 degrees clockwise around the X axis. By convention, controls that manipulate the scene are color coded so that red = the X axis, green = the Y axis and blue = the Z axis.
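A small Python sketch of the Y-up convention described above, where the ground is the X/Z plane. It assumes the ground plane sits at Y = 0 and represents positions as plain (x, y, z) tuples; the helper names are hypothetical.

```python
import math

AXIS_COLORS = {"x": "red", "y": "green", "z": "blue"}  # the color coding described above


def height_above_ground(position):
    """With Y as the vertical axis (and the ground at Y = 0), height is simply Y."""
    _, y, _ = position
    return y


def ground_distance(a, b):
    """Distance between two positions measured across the ground (X and Z), ignoring height."""
    return math.hypot(b[0] - a[0], b[2] - a[2])


print(height_above_ground((0, 10, 0)))        # 10: ten units off the ground
print(ground_distance((0, 0, 0), (3, 5, 4)))  # 5.0: the height (Y = 5) is ignored
```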

Here is a list of the skills I already possess, those I am partially familiar with, and those that I cannot do but would like to/need to be able to do, as of the end of the pathway stage of the course/the very start of the FMP. By the end of the FMP I hope to have greatly added to this list, especially in the 'proficient' section, with the new skills I gain whilst exploring my personal, self-directed project.

Things I can do/use:

- Draw

- Paint

- Microsoft Paint

- Final Cut Pro

- iMovie

- Make Flip-books

- Hand-drawn animation on acetate

- Photoshop

- Premiere Pro

- Photography